Introduction

In this topic, we will discover what is a compiler, how it works, and what are the types of compilers.

Don't skip this topic, because it's crucial to understand the basics of compilers, before learning about more advanced topics.

What is a compiler?

Compiler is a computer program that translates source code written in one programming language (the source language) into another language (the target language).

Programming language can be any language, including assembly language, machine code, HTML or even English. It's just a formal way to describe a set of instructions.

Usage of compilers

Compilers are used in many different areas, including not only programming languages, but also web browsers, databases, and even hardware.

Examples

Web browsers use JavaScript engines like V8 (used in Chrome and Edge) and SpiderMonkey (used in Firefox) to compile JavaScript code into machine code. This process happens just-in-time during execution, allowing for efficient processing and improved performance of web applications.

Interpreters are used in databases to compile SQL queries to handle them efficiently.

Verilog programmers use compilers to compile hardware description languages to machine code

Programmers use compilers to compile source code from programming language to executable files.

Types of compilers

There are few types of compilers, which have different approaches to the compilation process.

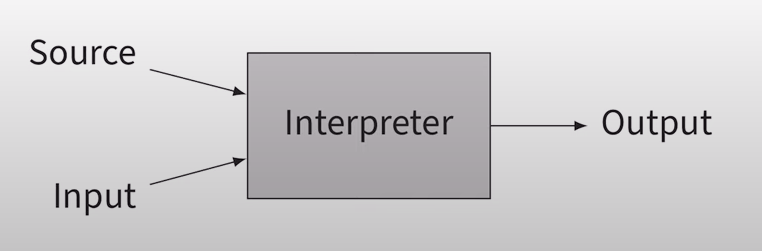

1. Interpreters

Interpreters are translators that reads in source code statement by statement and executes them directly.

It's usually a string of text, which is parsed into a sequence of tokens by lexer.

Interpreter reads in command line arguments and input from the user.

Like Python's input() function.

Interpreter prints output to the console.

Like Python's print() function.

e.g.: Python, JavaScript, Shell

(most common case usage is in command line tools and REPLs).

Interpreters are easy to implement, but they are usually slower than compilers.

It makes them very efficient only when only one statement is processed at a time.

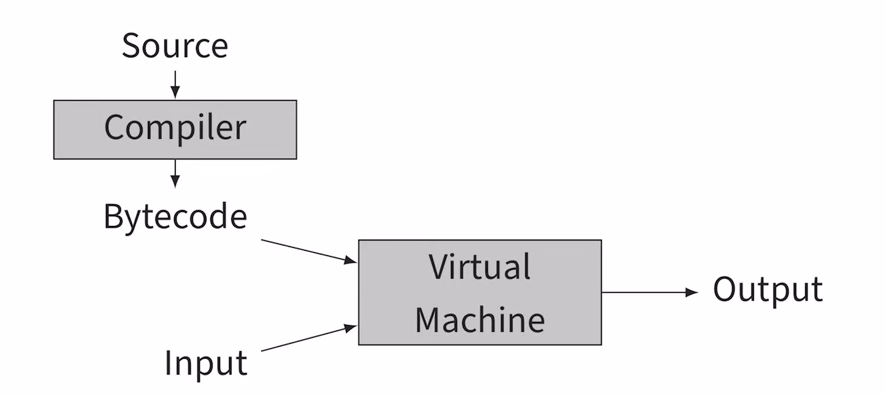

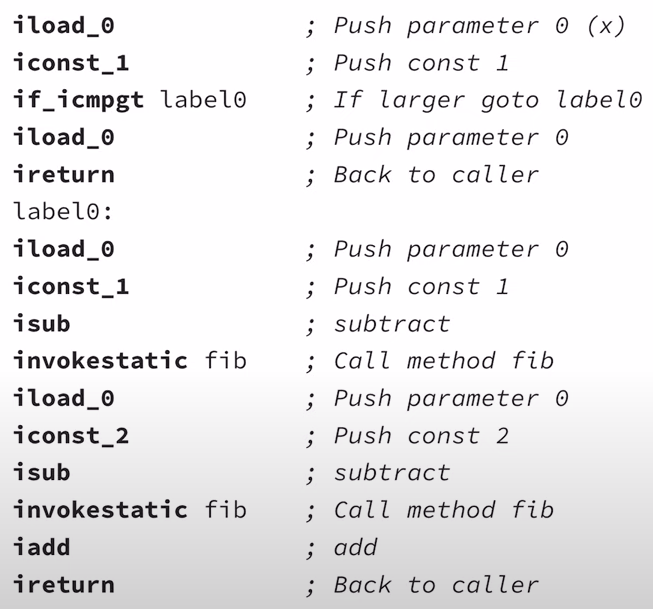

2. Virtual machines

Virtual machines are compilers that reads in source code and translates it into an intermediate representation, which is then executed by a virtual machine.

I think that virtual machines are most commonly used for cross-platform support.

It's usually a string of text, which is parsed into a sequence of tokens by lexer.

It's a sequence of intermediate instructions, which is executed by a virtual machine.

Virtual machine reads in command line arguments and input from the user.

Like Java's Scanner class.

Virtual machine prints output to the console.

Like Java's System.out.println() function.

e.g.: Java / Kotlin, Python

Bytecode looks like a machine code, but it still has a high-level constructions, like if statements, method calls, etc.

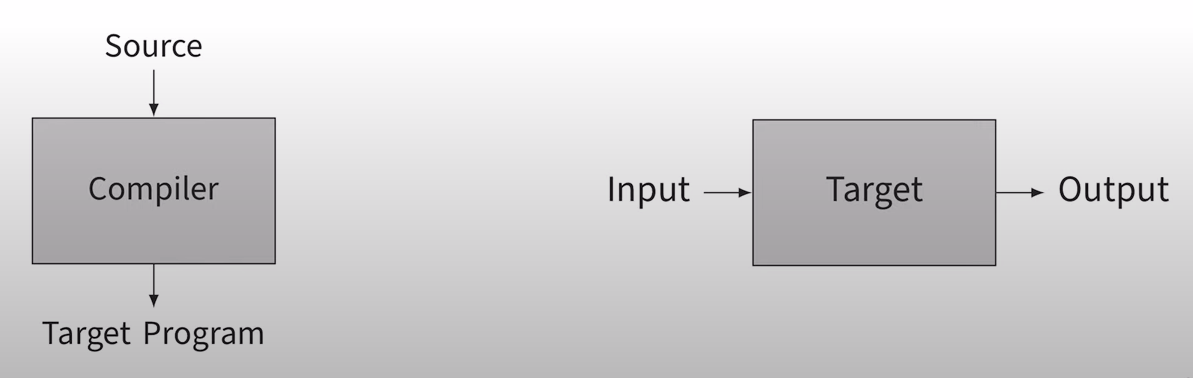

3. Standard compilers

Standard compilers are translating source code into machine code, which is then executed and can interact with runtime environment.

It's usually a string of text, which is parsed into a sequence of tokens by lexer.

It's a sequence of machine instructions, which is executed by some specific hardware.

Targets: x86, ARM, MIPS, WebAssembly, etc.

Target program reads in command line arguments and input from the user.

Like C's scanf() function.

Target program prints output to the console.

Like C's printf() function.

This kind of approach, when compiler is compiling source code into machine code before execution, is called ahead-of-time compilation.

4. Just-in-time compilers

Just-in-time compilers have appeared relatively recently and have become very popular. They compile during program execution, which makes them similar to interpreters.

e.g.: PyPy, Java, JavaScript, WebAssembly, LLVM, etc.

(nowadays, most of the languages support JIT compilation).

In difference from interpreters, JIT compilers don't execute source code directly. Instead, they compile them first and then execute as a machine code.

5. ButterSus's metalanguage compilers

I have an idea of a compiler, which will be able to change language itself. It's called metaprogramming language.

Nowadays, there are a lot of languages which partially support metaprogramming, like C++ with its templates, and constexpr functions, or Rust with its macros.

But I want to create a language, which will be able to fully support changing syntax and semantics, compile-time custom code generation, etc.

Therefore, as its main purpose is to create some domain-specific languages very easily, it should also support easy IDE integration.

To do so, compiler should be able to work as a language server using compile-time concept: dynamic syntax highlighting, code completion, code navigation, refactoring, warnings and errors (static analysis)

Frontend can be overriden by the user using some kind of Backus-Naur form. I'm not sure, but there should be strict rules, defined by OOP principles, in order to structure AST correctly.

All backend stuff should be implemented in a language itself. It means all logic that generates and optimizes code should be written in a language itself. It will allow to easily extend/override the language.

There are some examples: Meta II, MPS, Meta Cedille, Language workbench

Compilation process

In modern days, compilers are usually used in a pair with other tools, which makes the compilation process more complicated.

Let's take a look at the compilation process of two different languages: C and Java.

C was written in 1972, when compilers were used in a completely different way.

So, C was designed to work with assembly language and low-level environments, like UNIX.

Most of the approaches, which were used in the past, are mostly deprecated nowadays.

Java was written in 1995, but it's still used as a modern language.

It's not necessarily to use bytecode and JIT compilation, but it's a common approach nowadays.

Goals of compilers

Originally, compilers were designed to translate source code into machine code, basically translating program from language S to language T, while preserving its semantics and thereby implements S in T.

Good compilers should also meet the following criteria:

Correctness: The compiler should not change the meaning of the program. (Like any performed optimizations should not change the behavior of the program.)

Efficiency: The compiler should produce code that is as efficient as possible, meaning that it mostly should use less time and memory.

Low compilation time: Not necessarily, but it's better to have a fast compiler. For example, it's crucial to have linear time complexity.

Compatibility: The compiler should be compatible with the other compilers and tools, which users use (like IDEs, debuggers, etc.).

Integration: Good compiler will provide a good support for developers: nice error messages, debugging tools, etc.

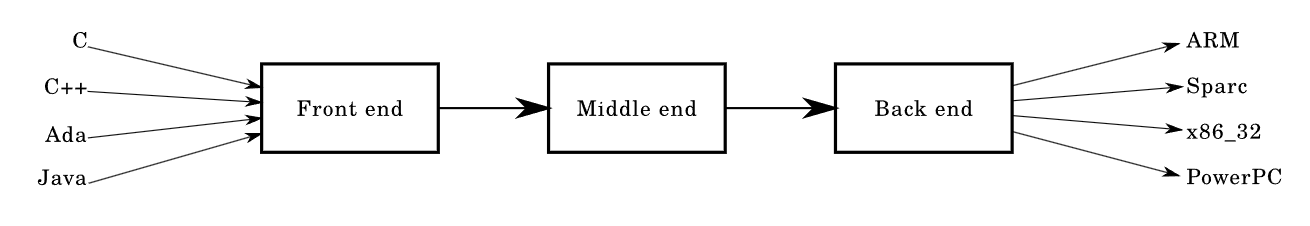

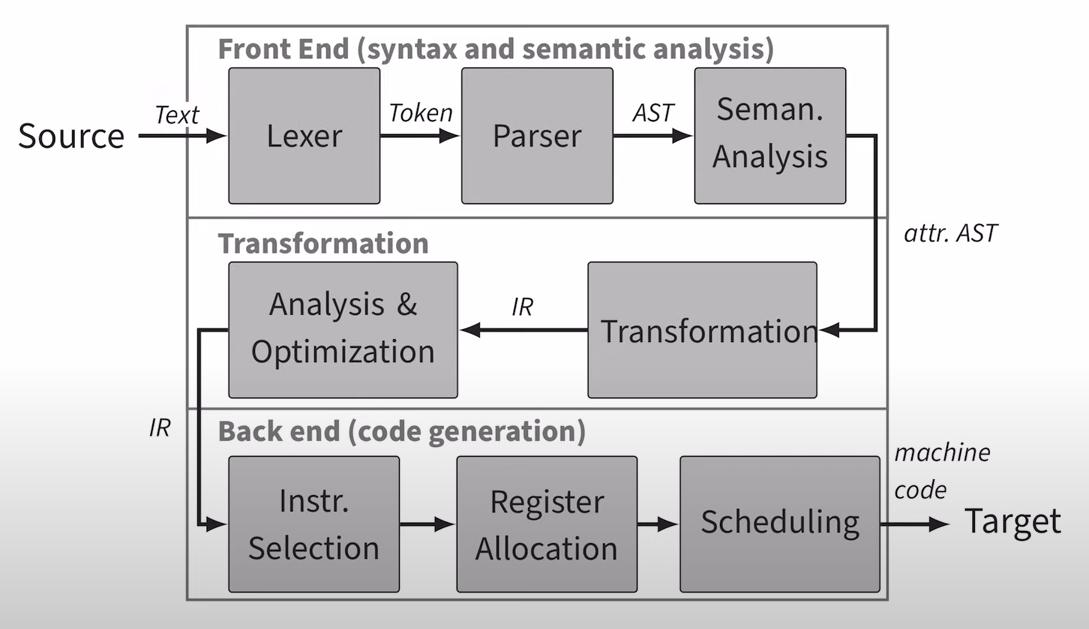

Structure of compilers

It's nice to have a clear structure of compilers, which will help us to understand how they work.

Of course, not all of these steps are required, each one can be implemented in a different way.

It's worth to mention, that each step can raise an error.

Frontend is responsible for the analysis of the source code.

It includes:

Lexical analysis (Lexing): It's a process of converting a sequence of characters into a sequence of tokens.

Syntax analysis (Parsing): It's a process of analyzing the syntax of a program and building an abstract syntax tree.

Semantic analysis (Analysis): It's a process of analyzing the semantics of a program and building a symbol table.

Each of these steps is usually independent of each other.

It's called front-end, because it's the only part of the compiler, which affects the syntax and semantics of the program.

Middle end (Transformations) is a relatively new part of the compiler, which is responsible for the transforming of a high-level representation into a low-level representation.

As a result, it's much easier to perform optimizations.

Next stages can be done by external tools, such as LLVM.

Backend is responsible for the generating of the target code. It includes:

Instruction selection: It's a process of selecting instructions depending on the target architecture.

Register allocation: It's a process of allocating registers, which usually requires a lot of analysis. (It's a very complicated topic, as nowadays memory access is much, much slower than CPU operations.)

Instruction scheduling: It's used for modern CPUs, which have a lot of pipelines, like out-of-order execution, branch prediction, cache memory, etc.

Keep in mind, that backend has lots of optimizations, which are sometimes private and not documented. And instead of making backend on your own, it's better to use some existing tools, like LLVM.

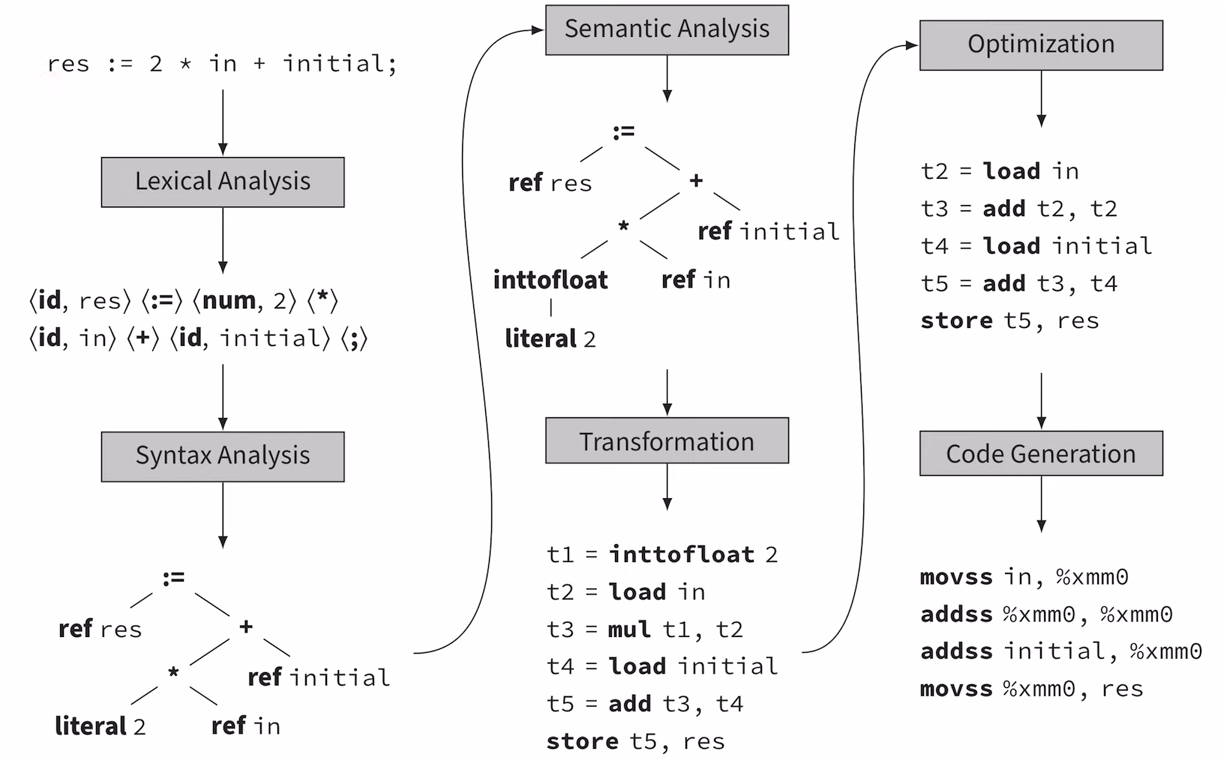

Detailed example

Let's take a look at the following source code:

We will see how it will be compiled into machine code, as it's a basic example.

First of all, lexer will split the source code into tokens, where each token has its value, type and position.

Order | Token | Value | Position |

|---|---|---|---|

1 |

|

|

|

2 |

|

|

|

3 |

|

|

|

4 |

|

|

|

5 |

|

|

|

6 |

|

|

|

7 |

|

|

|

8 |

|

|

|

As you can see, lexer doesn't care about spaces; it just ignores them. (But it's not always the case)

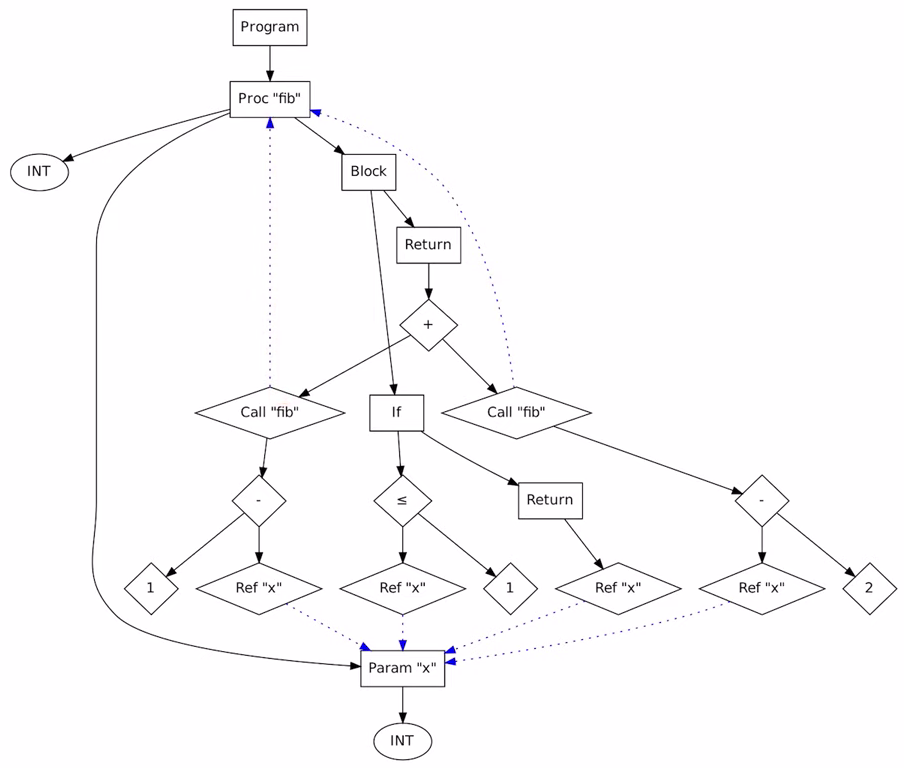

Then, parser will build an abstract syntax tree. The lower the node is, the more priority it has.

After that, semantic analyzer will build a symbol table, which will contain all the information about variables.

But still, most of the information is still contained in the Abstract Syntax Tree, and symbol table is just a helper.

Then, middle-end will transform the AST into a more convenient representation, which is called IR code.

This is staged, where code is almost can be executed (by interpreter), but it's not optimized yet.

After that, middle-end will optimize the IR code.

As you can see, some operations were removed, or replaced by more efficient ones.

Finally, backend will generate the target code.

Afterward, low-level optimizations will be performed, like register allocation, instruction scheduling.

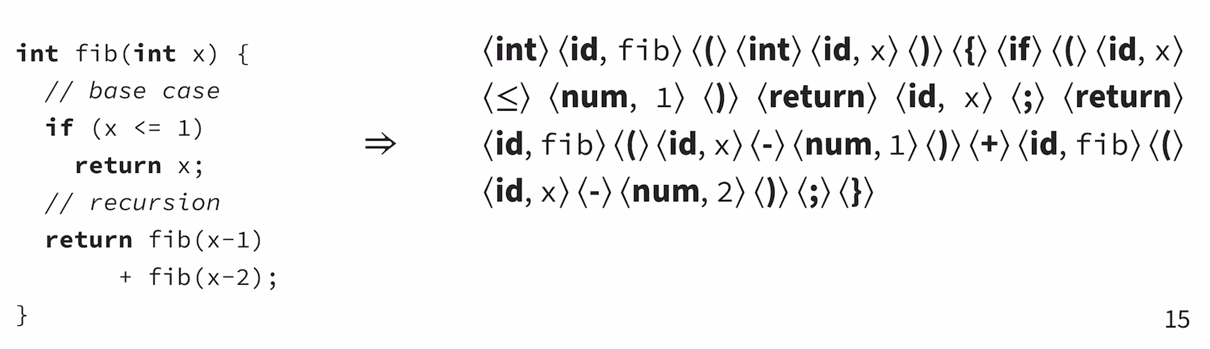

Lexical analysis

Lexical analysis is a process of converting a sequence of characters into a sequence of tokens. As input it takes a string of text (source code).

Each token is meaningful chunk of text. The parser skips some of these tokens, like whitespace, comments, etc.

Example

It's worth mentioning that typically lexer is implemented using an iterator style (one function that returns the next token).

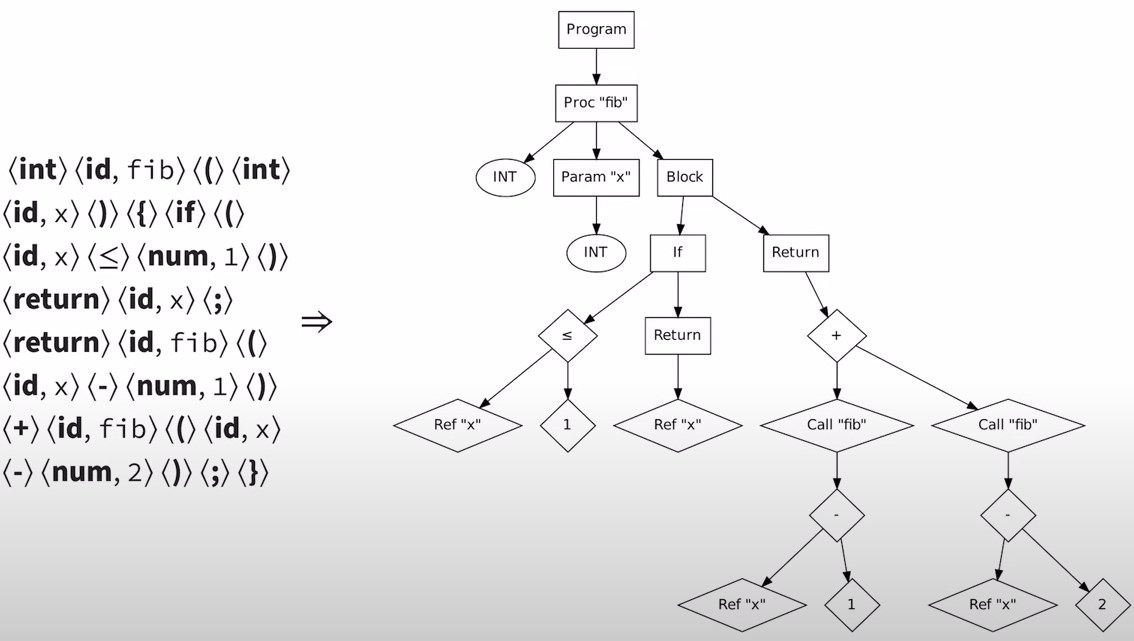

Syntax analysis

Syntax analysis is a process of analyzing the syntax of a program and building an abstract syntax tree. As input it takes a sequence of tokens, which are produced by lexer.

It makes the analysis much easier, as now we can travel through the tree and handle each node separately.

Mention that usually each node is bound to a token, but it's not always the case.

Semantic analysis

Semantic analysis is a process of analyzing the semantics of a program and building a symbol table.

This step is crucial, as it's responsible for type checking and variable scoping. So it extends the AST with additional information.

As you can see, now each name is referenced to a specific declaration, and each declaration has its type.

Transformation Phase

Transformation phase is a process of transforming the attributed AST into intermediate representation.

Sometimes, transformation phase is skipped in older compilers, and they just generate machine code directly.

Implementations

control structures:

if,while,for, etc. are unrolled in

ASM-like code (using labels, jumps, etc.).

operators:

+,-,*,/, for primitive types are replaced by inline assembly instructions (likeadd,sub,mul,div, etc.).functions: are replaced by

callandretinstructions.classes: are completely removed, as they are usually can be already implemented using functions and structures.

arrays: are replaced by pointers, as they are just a syntactic sugar.

etc.

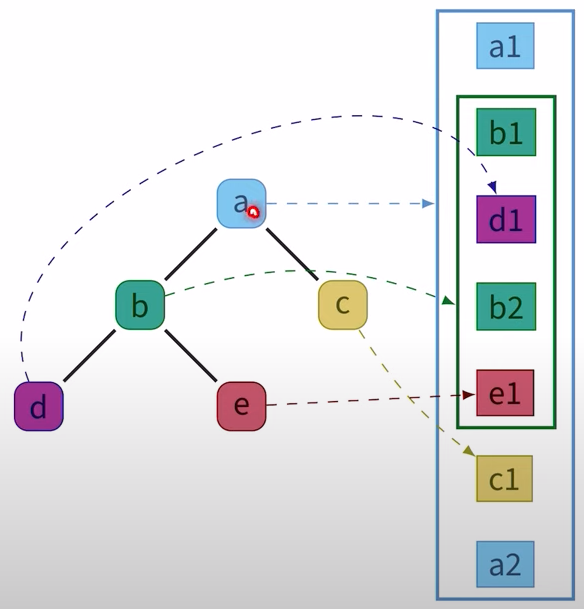

Syntax-Directed Translation

Syntax-directed translation is one of the most common approaches to the transformation phase, as it's very easy to implement.

Basically, when we are building an IR from AST, we can walk through the tree, generating IR code for each node.

In this example, letters represent nodes (like statements, if statements, etc.), and generated IR code is represented as a sequence of named pieces (a1, b1, ...).

The only problem is that generated IR code is very inefficient. It's hard to optimize "across" siblings: peephole optimization to compensate.

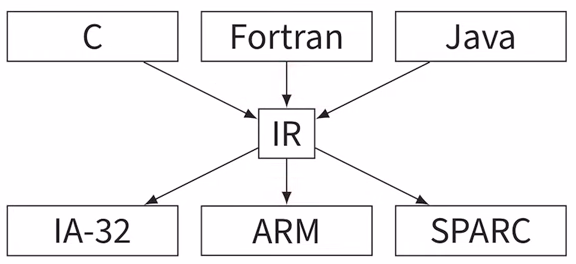

Intermediate Representation Ideas

Assume we want to support k source languages and n target languages.

Then, we would need to implement k * n compilers, which is not very efficient if we want to support all of them.

But instead, we can implement k + n compilers using universal IR, which maybe can affect the performance.

Actually, performance is not a problem, since most of the optimizations can be performed on the IR level.

e.g.: LLVM Bitcode, Java Bytecode, WebAssembly, etc.

Here's an example of Java-Bytecode:

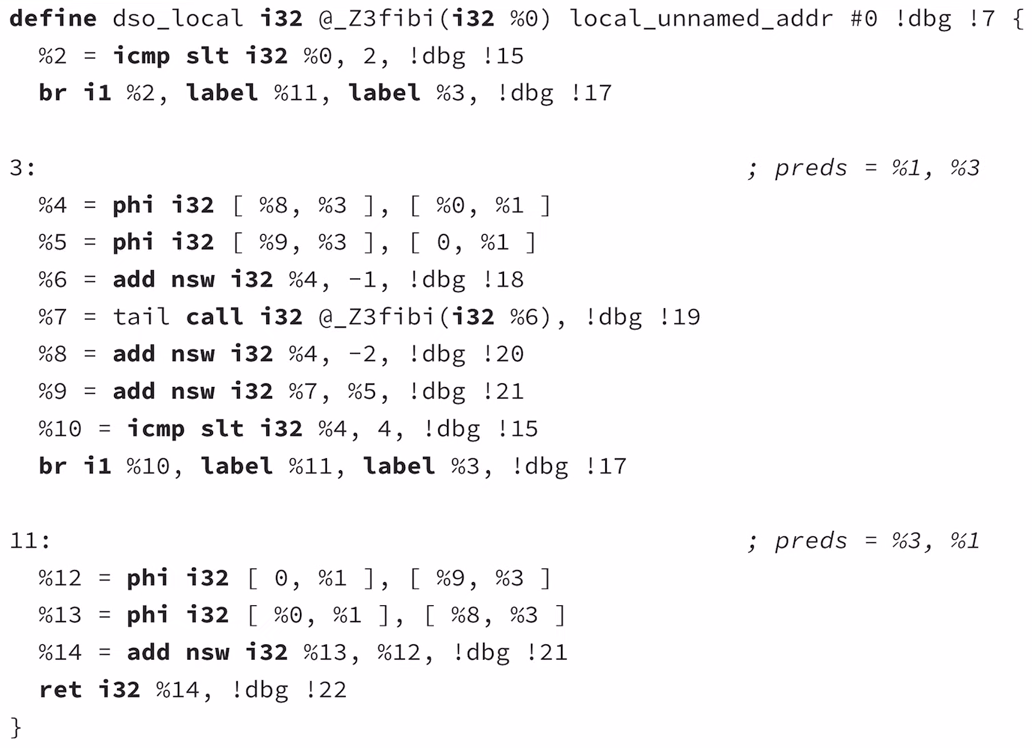

Here's an example of LLVM Bitcode:

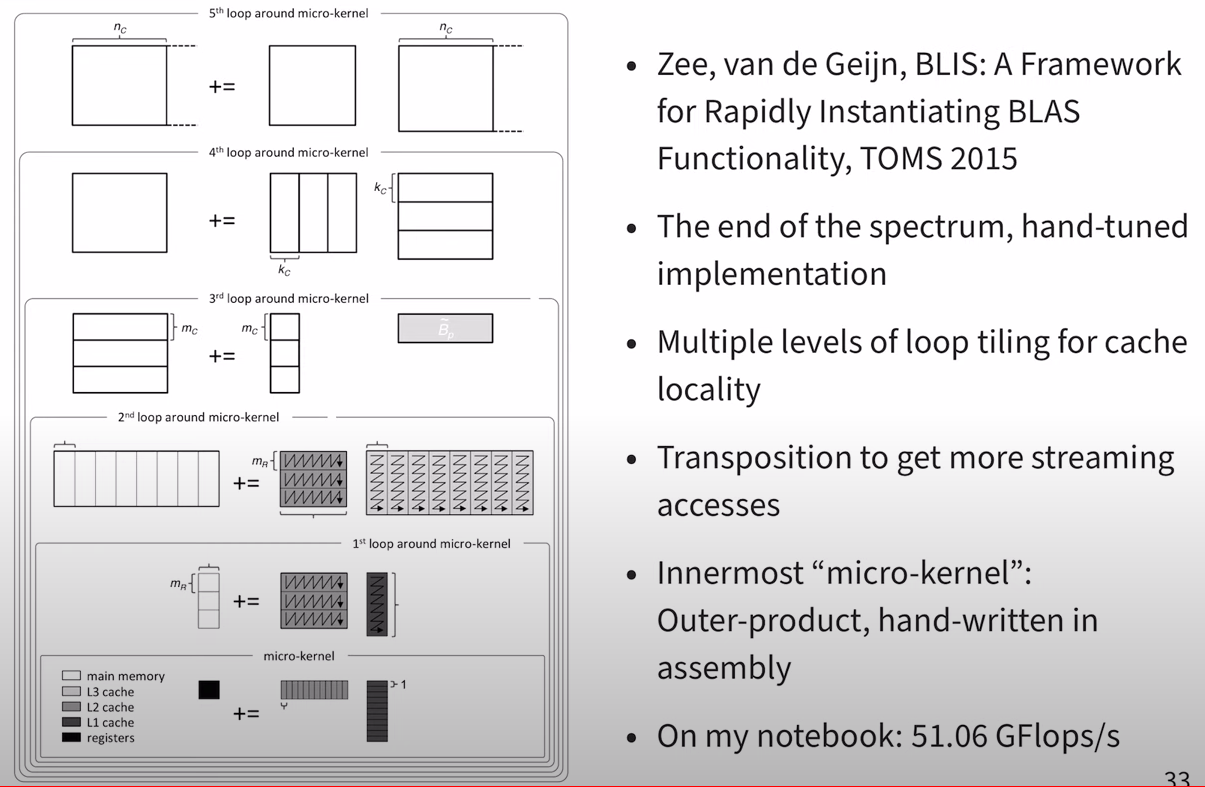

Optimizations

A really nice way to optimize code, depending on the concrete language semantics, is to optimize the IR code.

Optimization has various purposes:

Improve performance: As there are few levels of optimizations, some of them can be performed only with a context of language-specific semantics. (Like inlining in Zig, optimizations in this step are often called middle-level optimizations.)

Prevent programmer bad practices: In high-level languages, programmers are usually not aware of the performance, but more about the readability. For example, in C++ it's nice to use array items with indexes

a[i + 1], but it's not very efficient, as we need to calculate the address of the item every time.Remove overhead from language abstractions: Some language abstractions that have been compiled into IR code by syntax-directed translation may have some overhead, which can be removed by optimizations. (mostly it's the common case for OOP languages)

Separation of concerns: Some optimizations can be performed in several steps. This is done to make compiler development simpler and more structured.

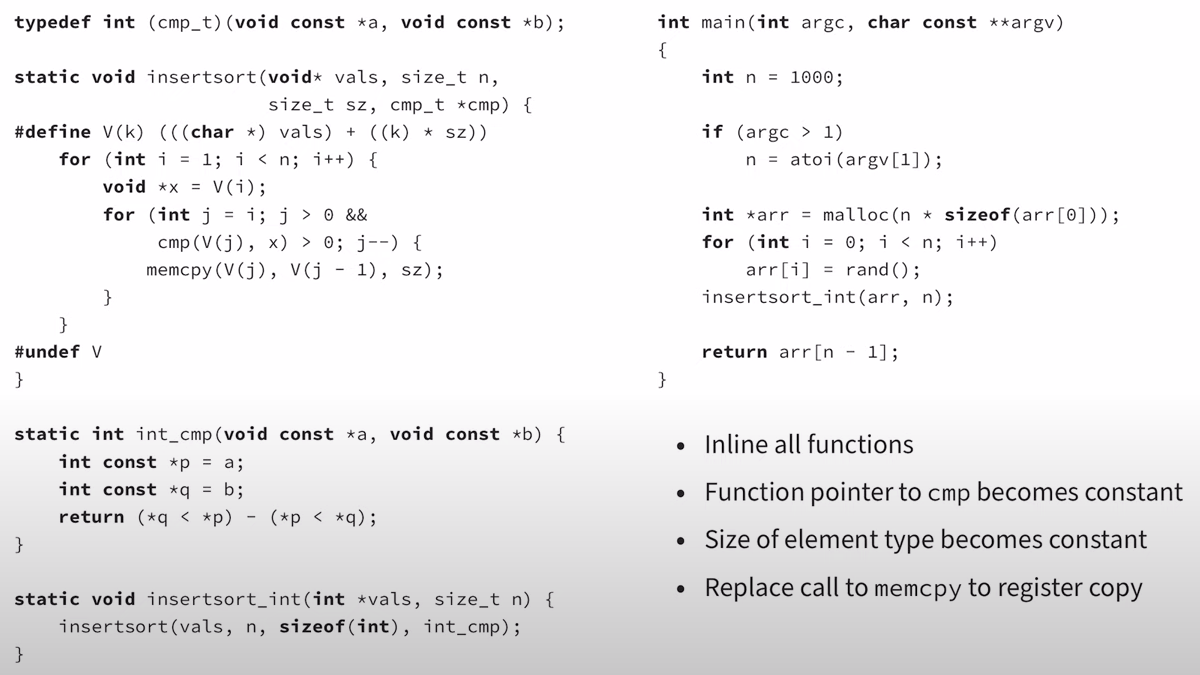

Example 1

In this example, we will see how the following code in C:

The code is not very efficient, as it's using pointers, memcpy, etc. But it doesn't matter, as the compiler will optimize it, as it knows all parameters and their types at compile time.

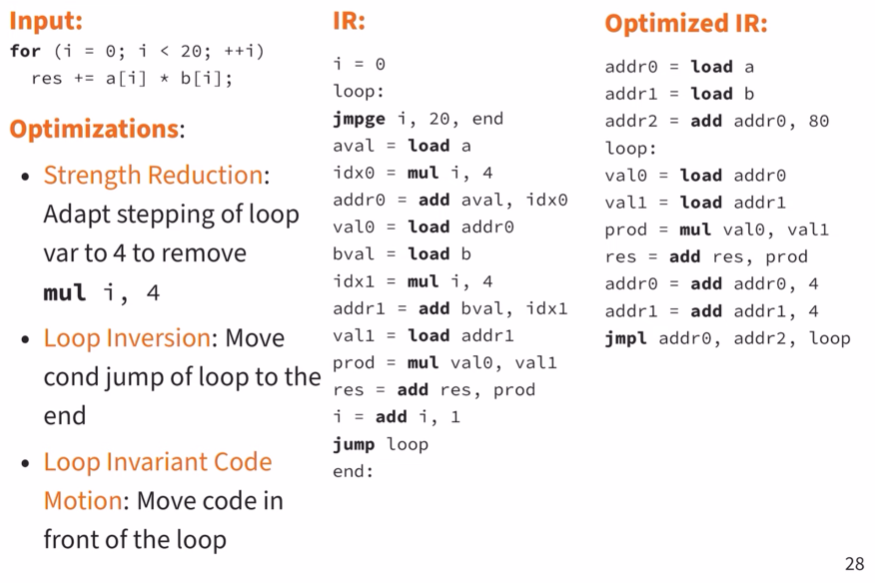

Example 2

Again, let's take a look at the following code in C:

There we're using loop optimization. However, this optimization is included in the LLVM compiler, so we don't need to implement it.

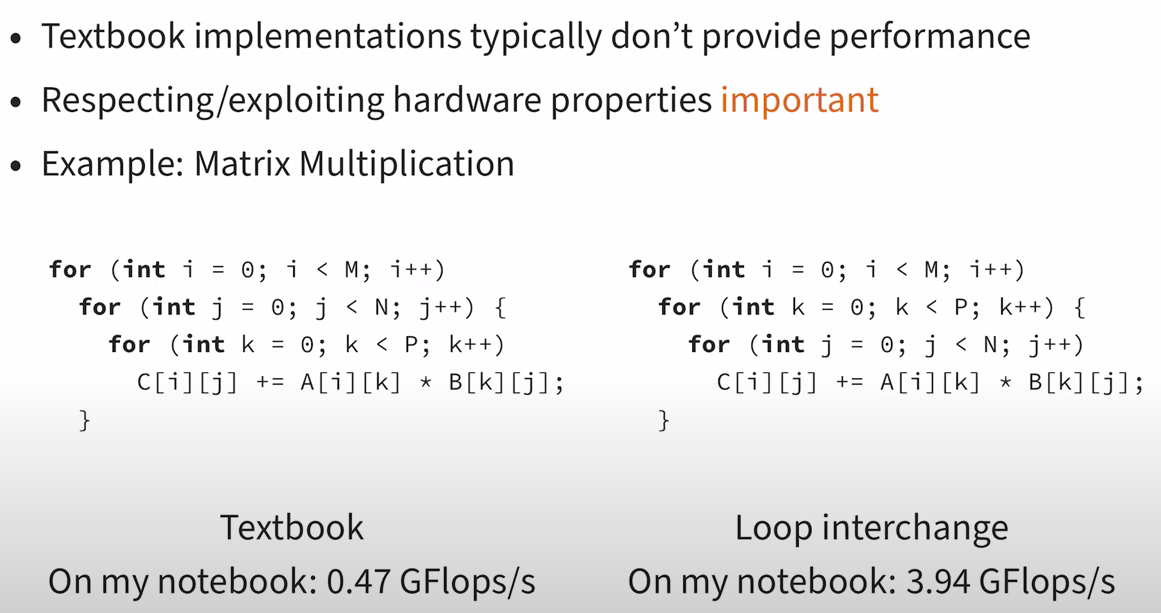

Example 3

Sometimes, optimizations are really dependent on the target machine. In this example, we will see how cache memory can affect the performance.

As you can see, right code is much more efficient.

This is because reading in a row is much faster than reading in a column, as it's much more cache-friendly.

Below is a more detailed explanation.

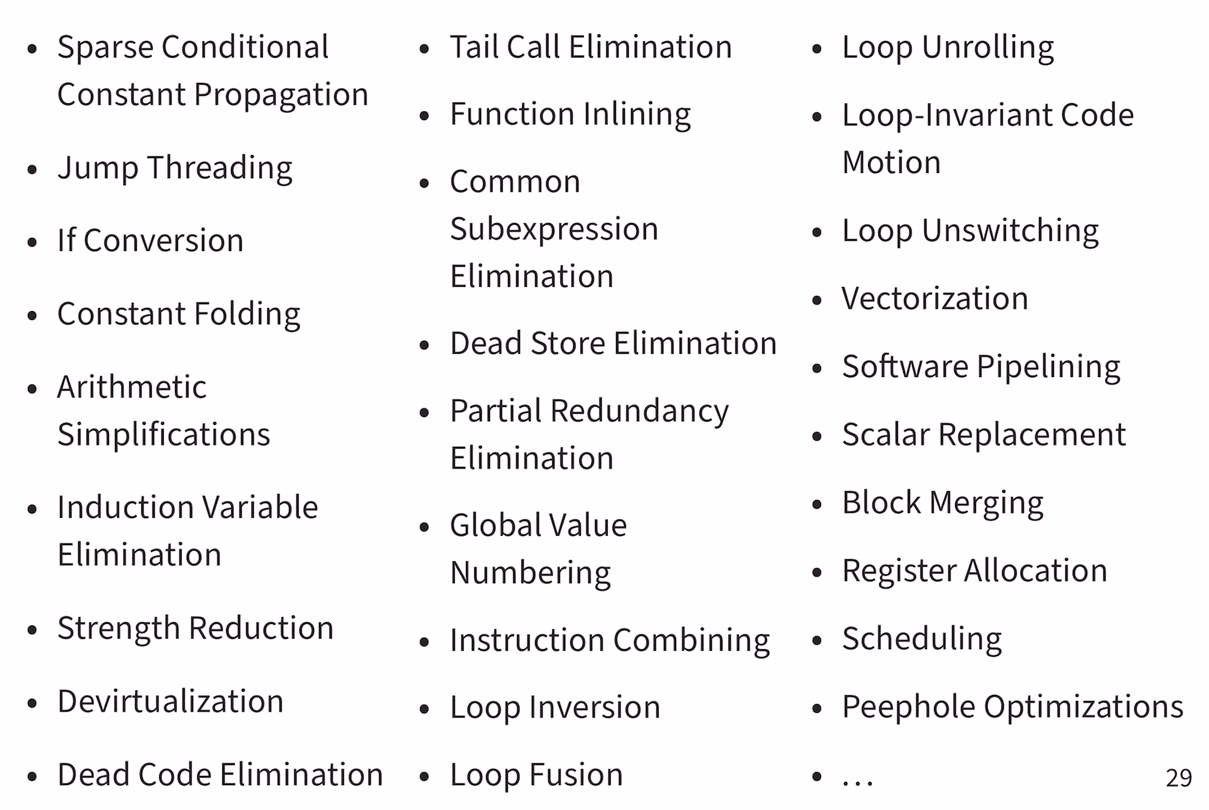

List of optimizations

There are some common optimizations, which are usually implemented in compilers:

Modern challenges

Nowadays compilers are used in many different areas, it's much more complicated than it was before.

Compile time has to be sub-quadratic for the common case. It usually means that compilation time should be linear or at least less than quadratic.

Most relevant code generation problems are NP-hard. It means that most of the optimizations are not possible to be solved in polynomial time. (It's a very complicated topic, if you are not familiar with it, you can read about it here.)

Target machines have resource constraints. We should find a balance between performance, memory usage and compilation time, which also leads to the previous problems.

Target machines are heterogeneous. It means that architectures are different, nowadays, we have multicore CPUs, GPUs. TPUs, ASICs, etc. Some of features are private and not documented, such as branch prediction, cache memory, out-of-order execution, etc.

It's often impossible to give a precise notion of "optimality" with respect to the quality of the code. We, as compiler developers, should also worry about language design, as it's crucial for the readability of the code.

End of Dennard scaling. I'm not sure what authors meant by this (it was mentioned in the lecture).

But I think that it's about the fact that CPU frequency is not increasing anymore, and we should find a way to make our code more efficient.

Next topics

Thanks for reading this topic! I hope you enjoyed it. Contact me if you have any suggestions or questions about this topic.

I'm planning to write more topics about compilers, so stay tuned! In the next topic, we will learn about Lexical Analysis.